NodeBB Assets - Object Storage

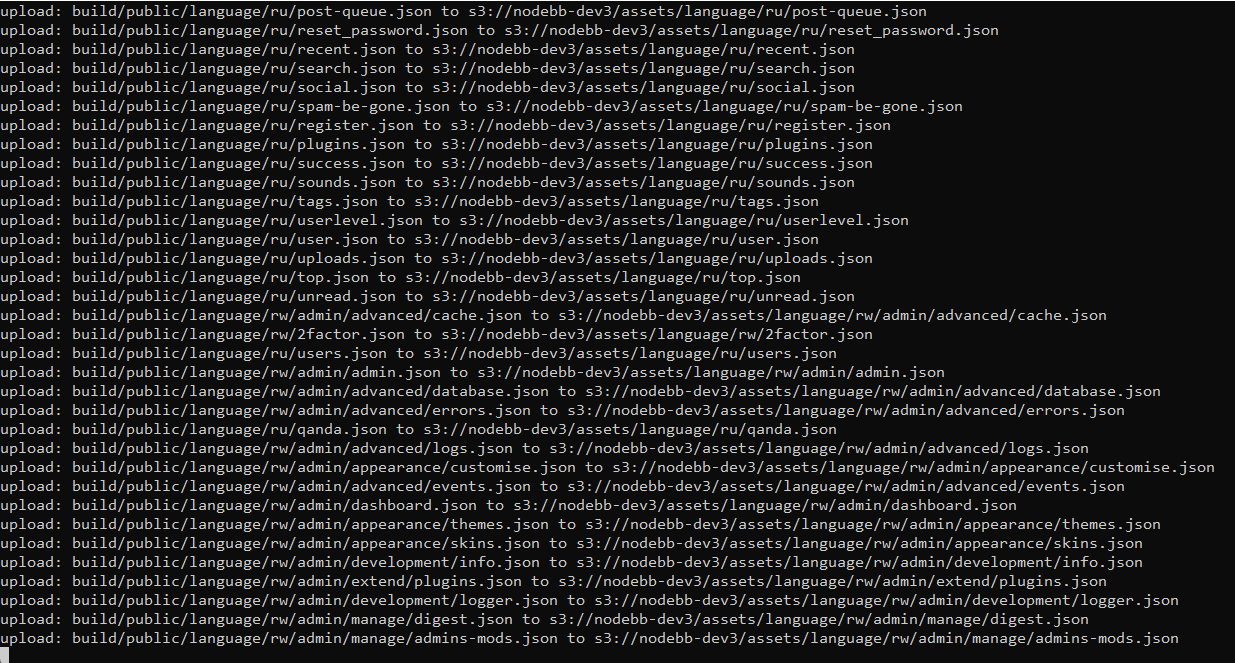

-

```
aws s3api list-objects-v2 --endpoint-url https://[account].r2.cloudflarestorage.com --bucket nodebb-dev3 | more
{
    "Contents": [
        {
            "Key": "assets/acp.min.js.map",
            "LastModified": "2023-05-17T14:37:46.508Z",
            "ETag": "\"30d96ca6de60c70d482689de4f656784\"",
            "Size": 2115263,
            "StorageClass": "STANDARD"
        },
        {
            "Key": "assets/acp.min.js",
            "LastModified": "2023-05-17T14:37:46.182Z",
            "ETag": "\"ac03fc028d895314c458a64783a5d2df\"",
            "Size": 617833,
            "StorageClass": "STANDARD"
        },
```

-

```
{
    "Contents": [
        {
            "Key": "assets/03371bf1d5cbb1eab58e3a0130d1e9c2.js",
            "LastModified": "2023-05-17T17:37:30.453Z",
            "ETag": "\"733f46933105eaf011a37d00a964aa39\"",
            "Size": 502502,
            "StorageClass": "STANDARD"
        },
        {
            "Key": "assets/10822.4553fc0a94f5e4017c9e.min.js",
            "LastModified": "2023-05-17T17:37:29.881Z",
            "ETag": "\"e825b3003d09c6acaef0960484c1cd43\"",
            "Size": 742,
            "StorageClass": "STANDARD"
        },
        {
            "Key": "assets/10943.83723e39cc9dae85e2e7.min.js",
            "LastModified": "2023-05-17T17:37:29.880Z",
            "ETag": "\"a14d728a69056d99f40de4e9d009c14f\"",
            "Size": 1149,
            "StorageClass": "STANDARD"
        },
        {
            "Key": "assets/11307.fd4abf5601dce6fcfaf8.min.js",
            "LastModified": "2023-05-17T17:37:30.211Z",
            "ETag": "\"b81d4a241111819396108c44b1932acc\"",
            "Size": 327,
            "StorageClass": "STANDARD"
        },
        {
            "Key": "assets/11393.036b406d0bb56dc3b1c4.min.js",
```

A lot more output here, but I think you get the picture.

It looks like everything is being minified into single files?
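Rather than paging through a long listing with `more`, a short Node.js sketch can summarize it by file extension. It assumes the output of `aws s3api list-objects-v2` has been parsed into an object with a `Contents` array of `Key`/`Size` entries, as in the listings above; `summarizeListing` is a hypothetical helper, not part of the AWS CLI.

```javascript
const path = require('path');

// Count objects and bytes per file extension in parsed
// `aws s3api list-objects-v2` output ({ Contents: [{ Key, Size, ... }] }).
function summarizeListing(listing) {
  const summary = { objects: 0, totalBytes: 0, byExtension: {} };
  for (const { Key, Size } of listing.Contents || []) {
    // ".js" for "acp.min.js", ".map" for "acp.min.js.map"
    const ext = path.posix.extname(Key) || '(none)';
    const group = summary.byExtension[ext] || (summary.byExtension[ext] = { count: 0, bytes: 0 });
    group.count += 1;
    group.bytes += Size;
    summary.objects += 1;
    summary.totalBytes += Size;
  }
  return summary;
}
```

Feeding it the collected CLI output, e.g. `summarizeListing(JSON.parse(output))`, shows at a glance how many separate `.js` and `.map` objects were uploaded and how large they are in total.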

-

Just copy a single file as a test:

```
aws s3 cp ./package.json s3://nodebb-dev3/assets/folder1/folder2/package.json --endpoint-url https://[account].r2.cloudflarestorage.com
upload: ./package.json to s3://nodebb-static/assets/folder1/folder2/package.json
aws s3 ls s3://nodebb-dev3/assets/folder1/folder2/package.json --endpoint-url https://[account].r2.cloudflarestorage.com
2023-05-17 12:48:58 7920 package.json
```

-

@phenomlab Hmm, perhaps not. I think you still need

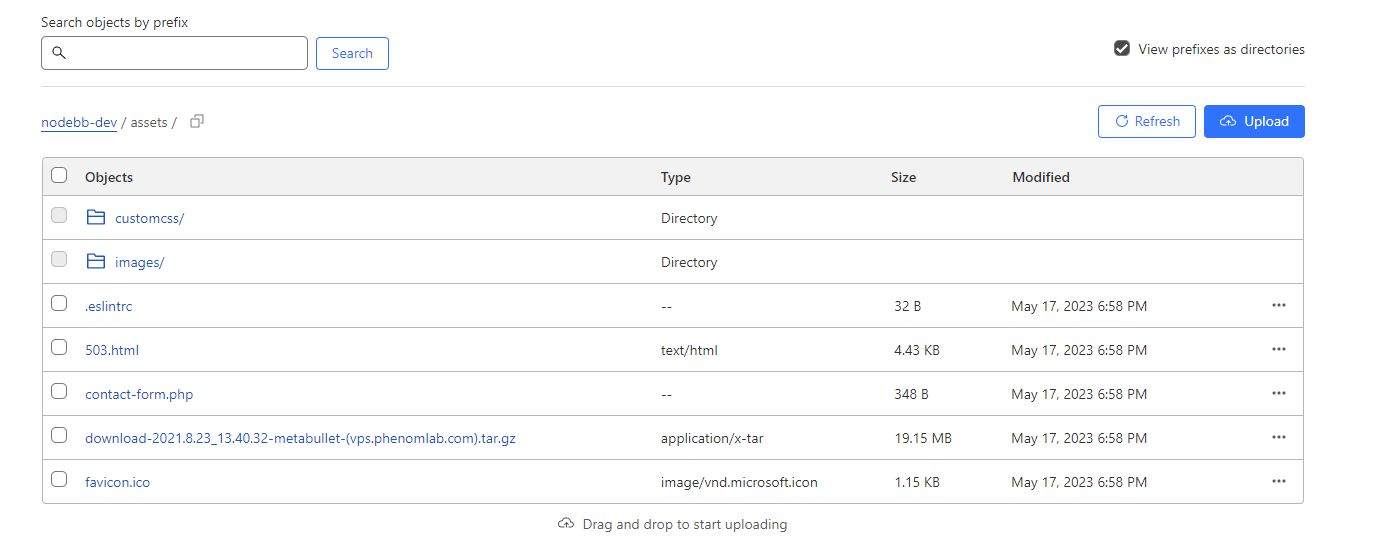

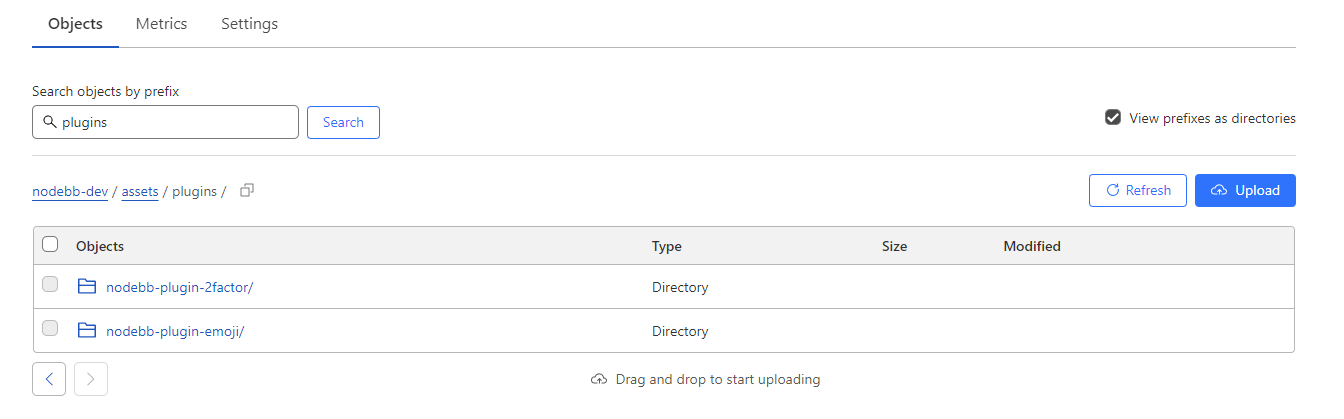

`./build/public/`, which I am pulling back in now.

EDIT: it seems the files are indeed there.

However, there is an issue with pulling the files on request.

-

So, the script can be modified as follows:

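```javascript
// Assumes the mime-types package; `sync` (from an s3-sync-client instance
// pointed at the R2 endpoint) and `monitor` are created earlier in the script.
const mime = require('mime-types');

async function syncStaticFiles() {
  // Upload both the build output and the static public folder
  const folders = ['./build/public', './public'];

  await Promise.all(folders.map(async (folder) => {
    await sync(folder, 's3://nodebb-static/assets', {
      monitor,
      maxConcurrentTransfers: 1000,
      commandInput: {
        ACL: 'public-read',
        // Content-Type from the file extension; text/html if unknown
        ContentType: (syncCommandInput) => mime.lookup(syncCommandInput.Key) || 'text/html',
      },
    });
  }));

  process.exit();
}
```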
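Both folders in the script are synced into the same `assets` prefix in parallel, so a file with the same relative path in `./build/public` and `./public` would map to the same object key, and which copy ends up in the bucket may depend on upload timing. The sketch below lists such overlapping keys before uploading. It assumes `sync` places each file at its path relative to the source folder under the destination prefix (the way `aws s3 sync` behaves); `listFiles`, `objectKeyFor`, and `findKeyCollisions` are hypothetical helpers built only on Node's `fs` and `path` modules.

```javascript
const fs = require('fs');
const path = require('path');

// Recursively list regular files under dir.
function listFiles(dir) {
  const files = [];
  for (const entry of fs.readdirSync(dir, { withFileTypes: true })) {
    const full = path.join(dir, entry.name);
    if (entry.isDirectory()) files.push(...listFiles(full));
    else if (entry.isFile()) files.push(full);
  }
  return files;
}

// Object key for a local file, assuming it is uploaded to its path relative
// to the source folder under the destination prefix (forward slashes only).
function objectKeyFor(sourceFolder, filePath, prefix) {
  const relative = path.relative(sourceFolder, filePath).split(path.sep).join('/');
  return `${prefix}/${relative}`;
}

// Keys that more than one source folder would write to.
function findKeyCollisions(filesByFolder, prefix) {
  const owners = new Map();
  for (const [folder, files] of Object.entries(filesByFolder)) {
    for (const file of files) {
      const key = objectKeyFor(folder, file, prefix);
      if (!owners.has(key)) owners.set(key, []);
      owners.get(key).push(folder);
    }
  }
  return [...owners].filter(([, folders]) => folders.length > 1).map(([key]) => key);
}
```

For example, `findKeyCollisions({ './build/public': listFiles('./build/public'), './public': listFiles('./public') }, 'assets')` returns an empty array when the two folders do not overlap.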

@razibal Right... I think I have it working now with those modifications.

Running some tests, but all looking good. Will test overnight...

This has a lot of potential using workers and R2, and really needs proper documentation. As you know, it's taken me 4 hours to get this working!

When I run `./nodebb build`, I don't see anything else running afterwards. Should it be calling that other script automatically?
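Nothing in the thread confirms whether `./nodebb build` triggers the sync script on its own. Assuming it does not, one option is a small wrapper that runs the build and then the upload, stopping if the build fails. This is a sketch using Node's built-in `child_process.spawnSync`; `runSteps` and the script name `sync-static.js` (standing in for whatever file holds `syncStaticFiles()`) are hypothetical.

```javascript
const { spawnSync } = require('child_process');

// Run each [command, args] step in order, streaming its output to this
// terminal, and stop at the first step that fails. Returns 0 on success,
// otherwise the failing step's exit code (1 if it could not start or was
// killed by a signal).
function runSteps(steps) {
  for (const [command, args] of steps) {
    const result = spawnSync(command, args, { stdio: 'inherit' });
    if (result.error || result.status !== 0) {
      console.error(`Step failed: ${command} ${args.join(' ')}`);
      return result.status || 1;
    }
  }
  return 0;
}
```

Run from the NodeBB directory, for example: `process.exit(runSteps([['./nodebb', ['build']], ['node', ['sync-static.js']]]));`. Stopping on the first failure avoids uploading a half-built `build/public` folder.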

-

@phenomlab said in NodeBB Assets - Object Storage:

> This has a lot of potential using workers and R2, and really needs proper documentation. As you know, it's taken me 4 hours to get this working!

Yeah, it only took me a few minutes to get it working as a POC this morning, but, as you experienced, getting it even partially operationalized took a lot longer.

The nice thing about implementing this is that it makes NodeBB scale horizontally with very little effort. All static assets are handled by Cloudflare Workers, while the NodeBB API can be deployed to droplets behind a load balancer. Spin up droplets as demand increases, then spin them back down when they are no longer needed.
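To make the split concrete, here is a minimal sketch of the edge side: requests under `/assets/` are answered from the bucket, and everything else is passed through to NodeBB. It assumes a bucket object whose `get(key)` behaves like a Cloudflare Workers R2 binding (resolving to `null` for a missing key, or to an object with `body`, `httpEtag`, and `writeHttpMetadata()`), and that object keys mirror the URL path without the leading slash, matching keys such as `assets/acp.min.js` in the listings above. `handleRequest` is a hypothetical name; this is not code taken from this thread.

```javascript
// Answer /assets/* from an R2-style bucket and forward everything else.
// Assumption: bucket.get(key) resolves to null for a missing key, or to an
// object with body, httpEtag and writeHttpMetadata(headers).
async function handleRequest(request, bucket, fetchOrigin = fetch) {
  const url = new URL(request.url);

  // Anything outside /assets/ goes to the origin (NodeBB behind the load balancer)
  if (!url.pathname.startsWith('/assets/')) {
    return fetchOrigin(request);
  }
  if (request.method !== 'GET' && request.method !== 'HEAD') {
    return new Response('Method Not Allowed', { status: 405, headers: { Allow: 'GET, HEAD' } });
  }

  // Object keys mirror the URL path without the leading slash,
  // e.g. /assets/acp.min.js -> "assets/acp.min.js"
  let key;
  try {
    key = decodeURIComponent(url.pathname.slice(1));
  } catch {
    return new Response('Bad Request', { status: 400 });
  }

  const object = await bucket.get(key);
  if (object === null) {
    return new Response('Not Found', { status: 404 });
  }

  // Copy stored metadata (e.g. the Content-Type set during upload) onto the response
  const headers = new Headers();
  object.writeHttpMetadata(headers);
  headers.set('etag', object.httpEtag);
  return new Response(request.method === 'HEAD' ? null : object.body, { headers });
}
```

In a Worker it could be wired up with something like `export default { fetch: (request, env) => handleRequest(request, env.ASSETS) }`, where `ASSETS` is a hypothetical R2 binding name. Keeping the handler a plain function also lets it be tested outside the Workers runtime with a stub bucket.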

-

@phenomlab said in NodeBB Assets - Object Storage:

> @razibal this can probably also be extended to services outside of Cloudflare and AWS.

That's correct. This would work with any CDN that provides object storage and a mechanism to proxy a path like `/assets` via cloud workers or a CDN proxy service.

-

I thought I'd come back here and post my findings with this so far. My DEV instance is using R2 along with CF workers, and works as expected. Speed is very good.

The production environment uses the traditional method of serving critical assets directly from NodeBB, but also uses Redis and 3 NodeBB processes (clustering). There is no discernible difference in speed between the two environments, which I find both pleasing and surprising!

I'm performing more tests, and will provide an update here.