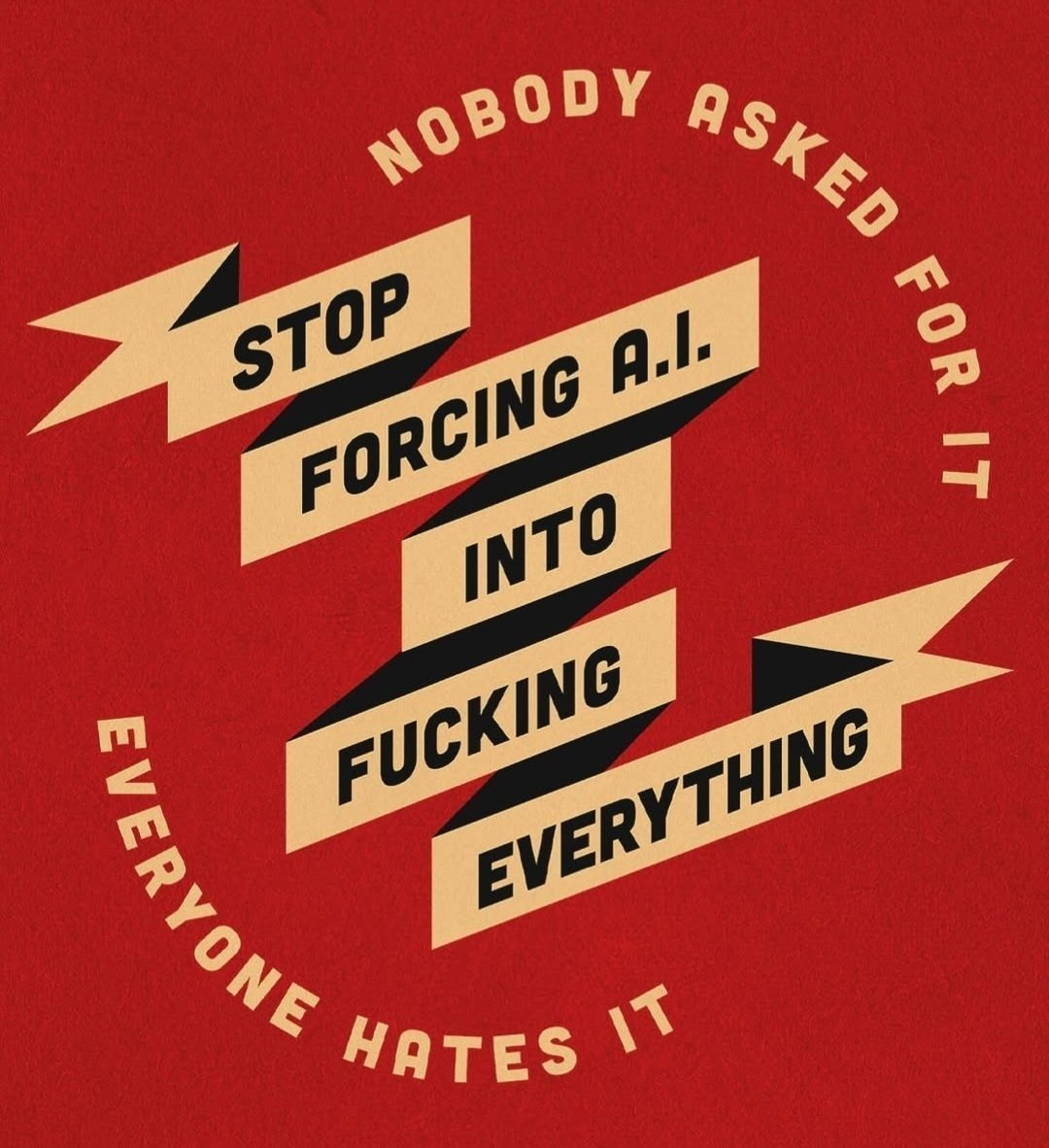

AI needs to stop

-

[email protected]replied to [email protected] last edited by

the people who matter, love it.

read "shareholders"

-

[email protected]replied to [email protected] last edited by

Yeah, it's a lot like piss in a river. I just drink around the piss.

-

[email protected]replied to [email protected] last edited by

I suspect that this is "grumpy old man" type thinking, but my concern is the loss of fundamental skills.

As an example, like many other people I've spent the last few decades developing written communication skills, emailing clients regarding complex topics. Communication requires not only an understanding of the subject, but an understanding of the recipient's circumstances, and the likelihood of the thoughts and actions that may arise as a result.

Over the last year or so I've noticed my assistants using LLMs to draft emails, with deleterious results. In many cases this reduces my thinking, feeling, experienced, and trained assistant to an automaton regurgitating words from publicly available references. The usual response to this concern is that my assistants are using the tool incorrectly, which is certainly the case, but my argument is that the use of the tool precludes the expenditure of the requisite time and effort to really learn.

Perhaps this is a kind of circular argument, like asking why kids need to learn handwriting when nothing needs to be handwritten.

It does seem as though we're on a trajectory towards stupider professional services though, where my bot emails your bot who replies and after n iterations maybe they've figured it out.

-

Containerize everything!

Crypto everything!

NFT everything!

Metaverse everything!

This too shall pass.

-

I think most can agree that AI has some great use cases, but I also think most people don't want AI in their damn toaster.

-

[email protected]replied to [email protected] last edited by

a rite of passage for your work to become so liked by others that they take your ideas,

ChatGPT is not a person.

People learn from the works they see [...] and that is what AI does as well.

ChatGPT is not a person.

It's actually really easy: we can say that chatgpt, which is not a person, is also not an artist, and thus cannot make art.

The mathematical trick of putting real images into a blender and then outputting a Legally Distinct one does not absolve the system of its source material.

but are instead weaving it into their process to the point it is not even clear AI was used at the end.

The only examples of AI in media that I like are ones that make it painfully obvious what they're doing.

-

That's so fucking weird wtf. Do you work for Elon Musk or something lmao

-

[email protected]replied to [email protected] last edited by

Oh yeah, I'm pretty worried about that from what I've seen in biochemistry undergraduate students. I was already concerned about how little structured support in writing science students receive, and I'm seeing a lot of over-reliance on ChatGPT.

With emails and the like, I find that I struggle with the pressure of a blank page/screen, so rewriting a mediocre draft is immensely helpful, but that strategy is only viable if you're prepared to go in and do some heavy editing. If it were a case of people honing their editing skills, then that might not be so bad, but I have been seeing lots of output that has the unmistakable ChatGPT tone.

In short, I think it is definitely "grumpy old man" thinking, but that doesn't mean it's not valid (I say this as someone who is probably too young to be a grumpy old crone yet)

-

[email protected]replied to [email protected] last edited by

when we're in the midst of another bull run.

Oh, that's nice. So, whose money are you rug pulling?

-

[email protected]replied to [email protected] last edited by

Then they may as well say they did it "with computers."

Oh, but that's not sexy, is it.

-

[email protected]replied to [email protected] last edited by

Cars and airplanes do have 3D printed parts. They're much more common in the prototyping phase, but they are used in production and are making their way to space.

I completely agree with your general sentiment though. Any time a new piece of technology shows promise there are a ton of people who will loudly proclaim that it will completely replace <old and busted technology> in <a massive amount of areas> while turning a blind eye to things like scaling and/or practical limitations.

See also: low/no code, which has roots going back to the 1980s at least.

-

[email protected]replied to [email protected] last edited by

No lie, I actually really love the concept of Microsoft Recall. I've got ADHD and am always trying to retrace my steps to figure out problems I solved months ago. The problem is that, as useful as it might be, it's just an attack surface.

-

[email protected]replied to [email protected] last edited by

I mean, no, it isn't. It is a marketing decision after all.

That doesn't mean that type of thing isn't the product of AI research.

-

What's wrong with containers?

-

[email protected]replied to [email protected] last edited by

CEOs get FOMO. They can get funding for their companies if they share "new, exciting innovations" for their products and AI is that - even if it's force-fed to fit.

-

[email protected]replied to [email protected] last edited by

Not sure about that hot take. Containers are here for the long run.

-

[email protected]replied to [email protected] last edited by

Rule 34 clearly states everything must fuck everything. No exceptions. AI will be forced into fucking everything!

-

[email protected]replied to [email protected] last edited by

One of my favorite examples is "smart paste". Got separate address information fields? (City, state, zip, etc.) Have the user copy the full address, click "Smart paste", feed the clipboard to an LLM with a prompt to transform it into the data your form needs. Absolutely game-changing imho.
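For anyone curious, here is a rough sketch of how a "smart paste" handler could look. It is only an illustration, not the poster's actual implementation: the field names, the prompt wording, and the `complete` callable (a stand-in for whatever LLM client you use) are all assumptions, and `fake_llm` exists only so the flow can be run without a real model.

```python
import json
from typing import Callable, Dict

# Hypothetical field names; a real form would define its own.
ADDRESS_FIELDS = ["street", "city", "state", "zip"]


def build_prompt(clipboard_text: str) -> str:
    """Ask the model for a JSON object containing exactly the form's fields."""
    return (
        "Split the following postal address into a JSON object with the keys "
        f"{', '.join(ADDRESS_FIELDS)}. Use an empty string for anything missing. "
        "Reply with JSON only.\n\n"
        f"Address: {clipboard_text}"
    )


def smart_paste(clipboard_text: str, complete: Callable[[str], str]) -> Dict[str, str]:
    """Turn pasted text into form field values.

    `complete` is a placeholder for an LLM call: it takes a prompt string
    and returns the model's reply as a string.
    """
    reply = complete(build_prompt(clipboard_text))
    try:
        data = json.loads(reply)
    except json.JSONDecodeError:
        data = {}
    if not isinstance(data, dict):
        data = {}
    # Keep only the expected keys and coerce values to strings, so a
    # malformed reply cannot add unexpected fields to the form.
    result = {}
    for name in ADDRESS_FIELDS:
        value = data.get(name)
        result[name] = "" if value is None else str(value)
    return result


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call, used only to demo the flow."""
    return '{"street": "123 Main St", "city": "Springfield", "state": "IL", "zip": "62704"}'


if __name__ == "__main__":
    print(smart_paste("123 Main St, Springfield, IL 62704", fake_llm))
```

The point of filtering down to the known field list is that the form never trusts the model's reply beyond the keys it asked for; anything unparseable just leaves the fields blank for the user to fill in.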

Or data ingestion from email - many of my customers get emails from their customers that have instructions in them that someone at the company has to convert into form fields in the app. Instead, we provide an email address (some-company-inbound@myapp.domain) and we feed the incoming emails into an LLM, ask it to extract any details it can (number of copies, post process, page numbers, etc.) and have that auto-fill into fields for the customer to review before approving the incoming details.
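A minimal sketch of the email side, under the same caveats: the field names are guessed from the examples above, `complete` is a hypothetical stand-in for an LLM client, and the result is only a draft for a human to review, not something saved automatically. The body is pulled out with Python's standard `email` package.

```python
import json
from email import message_from_string
from typing import Callable, Dict, Optional

# Hypothetical field names based on the examples mentioned in the post.
ORDER_FIELDS = ["number_of_copies", "post_process", "page_numbers"]


def extract_body(raw_email: str) -> str:
    """Return the first text/plain part of a raw email message."""
    msg = message_from_string(raw_email)
    for part in msg.walk():
        if part.get_content_type() == "text/plain":
            payload = part.get_payload(decode=True)
            charset = part.get_content_charset() or "utf-8"
            return payload.decode(charset, errors="replace")
    return ""


def draft_fields(raw_email: str, complete: Callable[[str], str]) -> Dict[str, Optional[str]]:
    """Build draft form values from an inbound email for later human review.

    `complete` is a placeholder for an LLM call (prompt in, reply text out).
    """
    prompt = (
        "Extract these details from the email below as a JSON object with the "
        f"keys {', '.join(ORDER_FIELDS)}. Use null for anything not stated. "
        "Reply with JSON only.\n\n" + extract_body(raw_email)
    )
    try:
        data = json.loads(complete(prompt))
    except json.JSONDecodeError:
        data = {}
    if not isinstance(data, dict):
        data = {}
    # Missing details stay None so the review screen can show them as blank
    # instead of presenting a guess as if it were stated in the email.
    draft = {}
    for name in ORDER_FIELDS:
        value = data.get(name)
        draft[name] = None if value is None else str(value)
    return draft


if __name__ == "__main__":
    raw = "From: a@example.com\nSubject: Order\n\nPlease print 250 copies, laminated.\n"
    # Canned reply standing in for a real model, for demonstration only.
    stub = lambda prompt: '{"number_of_copies": 250, "post_process": "laminate", "page_numbers": null}'
    print(draft_fields(raw, stub))
```

Nothing here approves or submits anything; the returned dictionary is meant to pre-fill the form so the customer can check it before accepting.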

So many incredibly powerful use-cases and folks are doing wasteful and pointless things with them.

-

[email protected]replied to [email protected] last edited by

It literally makes my job easier. Why would I hate it LMAO

-

[email protected]replied to [email protected] last edited by

Forcing AI into everything maximizes efficiency, automates repetitive tasks, and unlocks insights from vast data sets that humans can't process as effectively. It enhances personalization in services, driving innovation and improving user experiences across industries. However, thoughtful integration is critical to avoid ethical pitfalls, maintain human oversight, and ensure meaningful, responsible use of AI.