How to have nice URLs?

-

@darkpollo @baris NodeBB just runs `slugify()`, which is fairly restrictive... I believe it only allows the Latin alphabet, for broadest compatibility, even though URL paths can contain a broader set of UTF-8 characters. The problem here is that we "slugify" strings for two reasons:

- URL Safety and Readability, so invalid characters are removed and a "human-readable" slug is produced

- Compatibility, so when data is sent across to other sites, the chance of the same data coming out the other side is increased.
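To make the two goals concrete, here is a rough sketch of what two separate functions could look like. This is not NodeBB's code: `slugifyReadable` and `slugifyCompatible` are hypothetical names, and the sketch assumes a JavaScript runtime with Unicode property escapes (`\p{L}`) and `String.prototype.normalize`, such as a current Node.js.

```javascript
// Hypothetical sketch, NOT NodeBB's slugify: it only illustrates the two goals.

// Readability: keep letters and digits from any script, turn everything else into '-'.
function slugifyReadable(str) {
  return str
    .trim()
    .toLowerCase()
    .replace(/[^\p{L}\p{N}]+/gu, '-') // any run of non-letter/non-digit chars -> '-'
    .replace(/^-+|-+$/g, '');         // strip leading/trailing dashes
}

// Compatibility: fold accents to base letters, then keep only ASCII a-z and 0-9.
function slugifyCompatible(str) {
  return str
    .trim()
    .toLowerCase()
    .normalize('NFD')                 // split "á" into "a" + combining acute accent
    .replace(/[\u0300-\u036f]/g, '')  // drop the combining marks
    .replace(/[^a-z0-9]+/g, '-')      // remaining runs of other chars (spaces, punctuation, unfolded letters) -> '-'
    .replace(/^-+|-+$/g, '');
}

console.log(slugifyReadable('A title with special chars á é í ó in it'));
// -> a-title-with-special-chars-á-é-í-ó-in-it
console.log(slugifyCompatible('A title with special chars á é í ó in it'));
// -> a-title-with-special-chars-a-e-i-o-in-it
```

The trade-off is visible with letters that have no Unicode decomposition: `slugifyCompatible('søren')` returns `s-ren`, because `ø` is not folded and ends up treated as a separator.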

I think the solution here is to refactor `slugify` so that there are two variants: one for URL safety and readability, and another for compatibility.

-

Slugify doesn't remove the special characters @darkpollo mentioned.

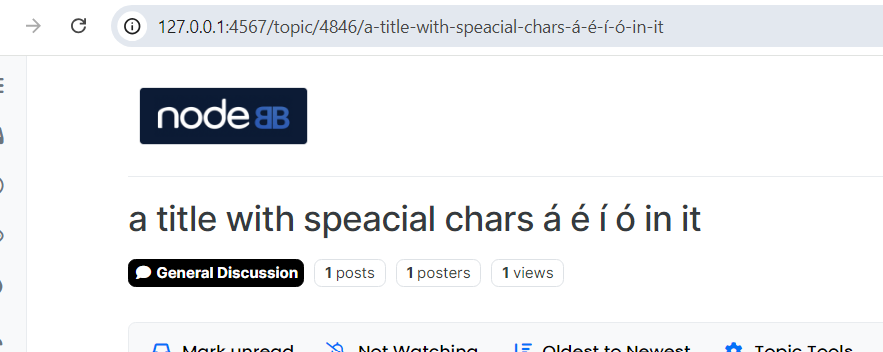

```javascript
const s = await app.require('slugify');
console.log(s('a title with speacial chars á é í ó in it'));
// a-title-with-speacial-chars-á-é-í-ó-in-it
```

The issue I saw was that they were percent-encoded if the entire URL is copied from the address bar.
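For reference, that encoding is standard percent-encoding of the UTF-8 bytes of each non-ASCII character. `encodeURIComponent` performs the same transformation, which makes it easy to preview what a copied URL will look like (the slug below is a made-up example):

```javascript
// Each non-ASCII character becomes its UTF-8 bytes written as %XX.
const slug = 'special-chars-á-é-í-ó';
console.log(encodeURIComponent(slug));
// -> special-chars-%C3%A1-%C3%A9-%C3%AD-%C3%B3

// Decoding reverses it exactly, so no information is lost in the round trip.
console.log(decodeURIComponent(encodeURIComponent(slug)) === slug); // -> true
```

Since the round trip is lossless, the encoded form should still resolve to the same slug; the complaint is mainly about how the pasted URL looks.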

-

@baris

Yes, I understand, but we need them to be removed so the URLs are nice.

What @julian suggested, having an option for this, seems great.

From what I can see, the `slugify` package on npm has an option for this:

https://www.npmjs.com/package/slugify

```javascript
strict: false, // strip special characters except replacement, defaults to `false`
```

This is how it is done in WordPress (sorry for the reference, but this is where I am coming from...): https://developer.wordpress.org/reference/functions/remove_accents/
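WordPress's `remove_accents()` works from a large hand-written character map. In JavaScript, a similar effect can be approximated with Unicode normalization, which is a different technique from WordPress's table: NFD splits most accented letters into a base letter plus combining marks that can then be stripped. Some letters (such as `ß`, `æ`, `ø`, `ł`) have no decomposition, so a small explicit map is still needed. A minimal sketch; `removeAccents` and the `EXTRA` map are hypothetical, and the map is deliberately incomplete:

```javascript
// Hypothetical removeAccents(): NOT a port of WordPress's remove_accents().
// Letters that NFD does not decompose need an explicit mapping.
// This map is illustrative only and far from complete.
const EXTRA = {
  'ß': 'ss', 'æ': 'ae', 'Æ': 'AE', 'ø': 'o', 'Ø': 'O',
  'ł': 'l', 'Ł': 'L', 'đ': 'd', 'Đ': 'D', 'ı': 'i',
};
const EXTRA_RE = new RegExp('[' + Object.keys(EXTRA).join('') + ']', 'g');

function removeAccents(str) {
  return str
    .normalize('NFD')                  // "ñ" -> "n" + U+0303 combining tilde
    .replace(/[\u0300-\u036f]/g, '')   // strip combining diacritical marks
    .replace(EXTRA_RE, (ch) => EXTRA[ch]);
}

console.log(removeAccents('Ñandú Straße Øresund Łódź'));
// -> Nandu Strasse Oresund Lodz
```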

And from what I can see in the code:

https://github.com/NodeBB/NodeBB/blob/45eabbf5ba2100201fe887a9d6fc81d88379d414/src/slugify.js#L2

It is supposed to strip those as well.

https://github.com/NodeBB/NodeBB/blob/45eabbf5ba2100201fe887a9d6fc81d88379d414/public/src/modules/slugify.js#L23

```javascript
function string_to_slug(str) {
  str = str.replace(/^\s+|\s+$/g, ''); // trim
  str = str.toLowerCase();

  // remove accents, swap ñ for n, etc
  var from = "àáäâèéëêìíïîòóöôùúüûñç·/_,:;";
  var to   = "aaaaeeeeiiiioooouuuunc------";
  for (var i = 0, l = from.length; i < l; i++) {
    str = str.replace(new RegExp(from.charAt(i), 'g'), to.charAt(i));
  }

  str = str.replace(/[^a-z0-9 -]/g, '') // remove invalid chars
    .replace(/\s+/g, '-') // collapse whitespace and replace by -
    .replace(/-+/g, '-'); // collapse dashes

  return str;
}
```

So maybe this is a bug?

I think that to make nice URLs and slugs, all of these characters should be replaced...

-

@darkpollo it looks like we used to replace accented characters with regular ones, but that was changed to support Unicode slugs a long time ago in https://github.com/NodeBB/NodeBB/commit/53caa5e422b986aa6e4a6e7350267777ad5741a5, so I'm not sure whether reverting it would break stuff. @julian?

-

@baris Thanks.

It seems that when that was changed, we lost that ability. Maybe just updating slugify could work?

We are using an 11-year-old version of slugify...

Or just add back this:

```javascript
// remove accents, swap ñ for n, etc
var from = "àáäâèéëêìíïîıòóöôùúüûñçşğ·/_,:;";
var to   = "aaaaeeeeiiiiioooouuuuncsg------";
for (var i = 0, l = from.length; i < l; i++) {
  str = str.replace(new RegExp(from.charAt(i), 'g'), to.charAt(i));
}
```

Also, I have been reading the forum and found many posts about this, most of them from users writing in languages other than English.
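To try the extended table without touching NodeBB, it can be dropped into a standalone function built from the same steps as the `string_to_slug()` example quoted earlier in the thread. This is only a test harness, and `stringToSlug` is a hypothetical name. It also shows the main limitation of the table approach: any character not listed in `from` is simply deleted by the final filter.

```javascript
// Hypothetical test harness: same steps as string_to_slug(), with the extended table.
function stringToSlug(str) {
  str = str.trim().toLowerCase();

  // remove accents, swap ñ for n, etc (extended table, including ı, ş, ğ)
  const from = 'àáäâèéëêìíïîıòóöôùúüûñçşğ·/_,:;';
  const to   = 'aaaaeeeeiiiiioooouuuuncsg------';
  for (let i = 0; i < from.length; i++) {
    str = str.replace(new RegExp(from.charAt(i), 'g'), to.charAt(i));
  }

  return str
    .replace(/[^a-z0-9 -]/g, '') // anything not mapped above and not a-z/0-9 is deleted
    .replace(/\s+/g, '-')        // collapse whitespace into '-'
    .replace(/-+/g, '-');        // collapse dashes
}

console.log(stringToSlug('Güzel Şarkılar')); // -> guzel-sarkilar
console.log(stringToSlug('Gödöllő'));        // -> godoll ("ő" is not in the table, so it is dropped)
```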

I think this is something that should be addressed and fixed, but of course we do not want to break anything.

-

You can open an issue on our GitHub. It is likely a breaking change because it would alter old slugs: for example, if a user had a userslug of `àáäâ`, it would become `aaaa` after this change, and the same goes for categories and groups. So it isn't as simple as just updating the `slugify` function.
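Before changing the function, one way to gauge the impact might be to re-run a candidate slugify over the existing slugs and list the ones that would change, plus any that would collide (for example an existing `aaaa` user alongside a folded `àáäâ`). This is a hypothetical sketch: `checkSlugMigration` is not a NodeBB function, the slugs are made-up sample data, and loading real user, category, and group slugs from the database is left out.

```javascript
// Hypothetical impact check, not part of NodeBB.
// Given existing slugs and a candidate slugify, report slugs that would change
// and resulting slugs that more than one old slug would map to.
function checkSlugMigration(existingSlugs, newSlugify) {
  const changed = new Map(); // old slug -> new slug
  const owners = new Map();  // resulting slug -> old slugs that produce it

  for (const oldSlug of existingSlugs) {
    const newSlug = newSlugify(oldSlug);
    if (newSlug !== oldSlug) changed.set(oldSlug, newSlug);
    if (!owners.has(newSlug)) owners.set(newSlug, []);
    owners.get(newSlug).push(oldSlug);
  }

  // A resulting slug claimed by more than one old slug is a collision.
  const collisions = [...owners].filter(([, olds]) => olds.length > 1);
  return { changed, collisions };
}

// Example with a simple accent-folding function (NFD + strip combining marks).
const fold = (s) => s.normalize('NFD').replace(/[\u0300-\u036f]/g, '');
const { changed, collisions } = checkSlugMigration(['àáäâ', 'aaaa', 'bob'], fold);

console.log(JSON.stringify([...changed])); // -> [["àáäâ","aaaa"]]
console.log(JSON.stringify(collisions));   // -> [["aaaa",["àáäâ","aaaa"]]]
```

The `changed` map could also serve as a starting point for redirecting old URLs to new ones, while the collisions would need a manual decision.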