How to reduce the load?

-

@gotwf It will be fun with a DoS.

Anyway, it seems to be crawler bots.

Yes, I get the same as you:

    # nginx -T | grep -i worker_connect
    nginx: the configuration file /etc/nginx/nginx.conf syntax is ok
    nginx: configuration file /etc/nginx/nginx.conf test is successful
    worker_connections 1024;
    # nginx -T | grep -i worker_process
    nginx: the configuration file /etc/nginx/nginx.conf syntax is ok
    nginx: configuration file /etc/nginx/nginx.conf test is successful
    worker_processes auto;

I will test increasing the number of nodes, but I'm still thinking about how to cache the output.
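One possible way to cache the output is nginx's own proxy cache in front of NodeBB, serving short-lived copies of pages to guests (which is what crawlers are). This is a minimal sketch, not a tested NodeBB recipe. It assumes NodeBB listens on `127.0.0.1:4567` and that logged-in users can be recognised by NodeBB's `express.sid` session cookie; check which cookie your install actually sets. The cache path, the zone name `nodebb_cache`, the map variable `$nodebb_skip_cache` and the sizes are hypothetical.

```nginx
# http {} context. Cache path, zone name "nodebb_cache" and sizes are hypothetical.
proxy_cache_path /var/cache/nginx/nodebb levels=1:2 keys_zone=nodebb_cache:10m
                 max_size=1g inactive=10m use_temp_path=off;

# Assumption: a request is "logged in" if it carries the express.sid cookie.
map $http_cookie $nodebb_skip_cache {
    default            0;
    "~express\.sid="   1;
}

server {
    # ... existing listen / server_name / ssl / proxy_set_header directives ...

    # Keep Socket.IO traffic (polling and websockets) out of the cache entirely.
    location /socket.io/ {
        proxy_pass http://127.0.0.1:4567;
    }

    location / {
        proxy_pass http://127.0.0.1:4567;

        proxy_cache nodebb_cache;
        proxy_cache_valid 200 1m;   # short TTL so guests still see new posts soon
        proxy_cache_lock on;        # concurrent misses for one page wait for a single upstream request
        # Serve a stale copy while refreshing, or when NodeBB is failing.
        proxy_cache_use_stale error timeout updating http_500 http_502 http_503 http_504;

        proxy_cache_bypass $nodebb_skip_cache;  # never answer logged-in users from cache
        proxy_no_cache     $nodebb_skip_cache;  # and never store their pages

        # Note: an add_header here replaces any add_header inherited from server {}.
        add_header X-Cache-Status $upstream_cache_status;
    }
}
```

By default nginx will not store a response that carries `Set-Cookie` or `Cache-Control: private`, `no-cache` or `no-store`. If NodeBB sends those even to guests, `X-Cache-Status` will keep showing `MISS`, and this approach needs further work.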

Thanks

-

@normando I'm wondering if this will help. I use caching over at https://sudonix.com, and it's set up as follows:

    server {
        listen x.x.x.x;
        listen [::]:80;
        server_name www.sudonix.com sudonix.com;
        return 301 https://sudonix.com$request_uri;
        access_log /var/log/virtualmin/sudonix.com_access_log;
        error_log /var/log/virtualmin/sudonix.com_error_log;
    }

    server {
        listen x.x.x.x:443 ssl http2;
        server_name www.sudonix.com;
        ssl_certificate /home/sudonix/ssl.combined;
        ssl_certificate_key /home/sudonix/ssl.key;
        return 301 https://sudonix.com$request_uri;
        access_log /var/log/virtualmin/sudonix.com_access_log;
        error_log /var/log/virtualmin/sudonix.com_error_log;
    }

    server {
        server_name sudonix.com;
        listen x.x.x.x:443 ssl http2;
        access_log /var/log/virtualmin/sudonix.com_access_log;
        error_log /var/log/virtualmin/sudonix.com_error_log;

        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto https;
        proxy_set_header Host $http_host;
        proxy_set_header X-NginX-Proxy true;
        proxy_redirect off;

        # Socket.io Support
        proxy_http_version 1.1;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection "upgrade";

        gzip on;
        gzip_min_length 1000;
        gzip_proxied off;
        gzip_types text/plain application/xml text/javascript application/javascript application/x-javascript text/css application/json;

        location @nodebb {
            proxy_pass http://127.0.0.1:4567;
        }

        location ~ ^/assets/(.*) {
            root /home/sudonix/nodebb/;
            try_files /build/public/$1 /public/$1 @nodebb;
            add_header Cache-Control "max-age=31536000";
        }

        location /plugins/ {
            root /home/sudonix/nodebb/build/public/;
            try_files $uri @nodebb;
            add_header Cache-Control "max-age=31536000";
        }

        location / {
            proxy_pass http://127.0.0.1:4567;
        }

        add_header X-XSS-Protection "1; mode=block";
        add_header X-Download-Options "noopen" always;
        add_header Content-Security-Policy "upgrade-insecure-requests" always;
        add_header Referrer-Policy 'no-referrer' always;
        add_header Permissions-Policy "accelerometer=(), camera=(), geolocation=(), gyroscope=(), magnetometer=(), microphone=(), payment=(), usb=()" always;
        add_header X-Powered-By "Sudonix" always;
        add_header Access-Control-Allow-Origin "https://sudonix.com https://blog.sudonix.com" always;
        add_header X-Permitted-Cross-Domain-Policies "none" always;
        fastcgi_split_path_info ^(.+\.php)(/.+)$;
        ssl_certificate /home/sudonix/ssl.combined;
        ssl_certificate_key /home/sudonix/ssl.key;
        rewrite https://sudonix.com/$1 break;
        if ($scheme = http) {
            rewrite ^/(?!.well-known)(.*) https://sudonix.com/$1 break;
        }
    }

-

@normando said in How to reduce the load?:

Anyway, it seems to be crawler bots.

Do you have the logs? Is it primarily Googlebot? It will come in like a thundering herd of thunderin' hoovers. It does not respect conventions such as the Crawl-delay directive in robots.txt; instead, it requires you to sign up for their webmaster tools. Anyway, back to the point: if you don't, Googlebot will basically scale up to suck down as much as it can, as fast as it possibly can. Hence, if you are running NodeBB on a VM in a reasonably well-connected data center, network bandwidth is unlikely to be the bottleneck, which leaves the VM itself.
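To find out who is hitting the site and which requests are expensive, a log format that records response times next to the user agent can help. This is a minimal sketch; the format name `timed` and the log path are hypothetical, while `$request_time`, `$upstream_response_time` and `$http_user_agent` are standard nginx variables.

```nginx
# http {} context. The format name "timed" and the log path are hypothetical.
log_format timed '$remote_addr [$time_local] "$request" $status '
                 'rt=$request_time urt=$upstream_response_time '
                 '"$http_user_agent"';

server {
    # ... existing directives ...
    # Several access_log directives may sit on the same level; the existing log keeps working.
    access_log /var/log/nginx/nodebb_timed.log timed;
}
```

With this format the user agent is the fourth `"`-separated field, so `awk -F'"' '{print $4}' /var/log/nginx/nodebb_timed.log | sort | uniq -c | sort -rn | head` lists the busiest clients.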

Googlebot comes in fast, but it also leaves fast. It visits several times a day, at roughly regular intervals that depend on site activity and its mystery algos. So if you are seeing other, more off-the-wall bots at random times 'twixt its visits, then those bots may warrant further investigation. Otherwise, there's not much you can do about Googlebot, but if you give the VM more resources, it can finish and leave sooner.
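If the logs do show crawlers hammering the forum, one option is to rate-limit only requests whose user agent looks like a bot, leaving ordinary browsers untouched. This is a sketch under stated assumptions: the zone name `bots`, the variable `$bot_limit_key`, the rate and the user-agent pattern are all hypothetical and should be tuned against your own logs.

```nginx
# http {} context. Zone name, variable name, rate and UA pattern are hypothetical.
map $http_user_agent $bot_limit_key {
    default                          "";
    "~*(bot|crawler|spider|slurp)"   $binary_remote_addr;
}

# Requests whose key is empty (ordinary browsers) are not counted at all.
limit_req_zone $bot_limit_key zone=bots:10m rate=1r/s;

server {
    # ... existing listen / server_name / ssl / proxy_set_header directives ...

    location / {
        # A matching client may queue up to 10 extra requests, which are delayed
        # to 1r/s; anything beyond that gets 429 instead of the default 503.
        limit_req zone=bots burst=10;
        limit_req_status 429;

        proxy_pass http://127.0.0.1:4567;
    }
}
```

Keying by `$binary_remote_addr` limits each crawler address separately. Googlebot spreads its requests across many addresses, so this caps per-address load rather than the total. Google documents 429 and 503 responses as signals for Googlebot to slow down.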

Have fun!

-

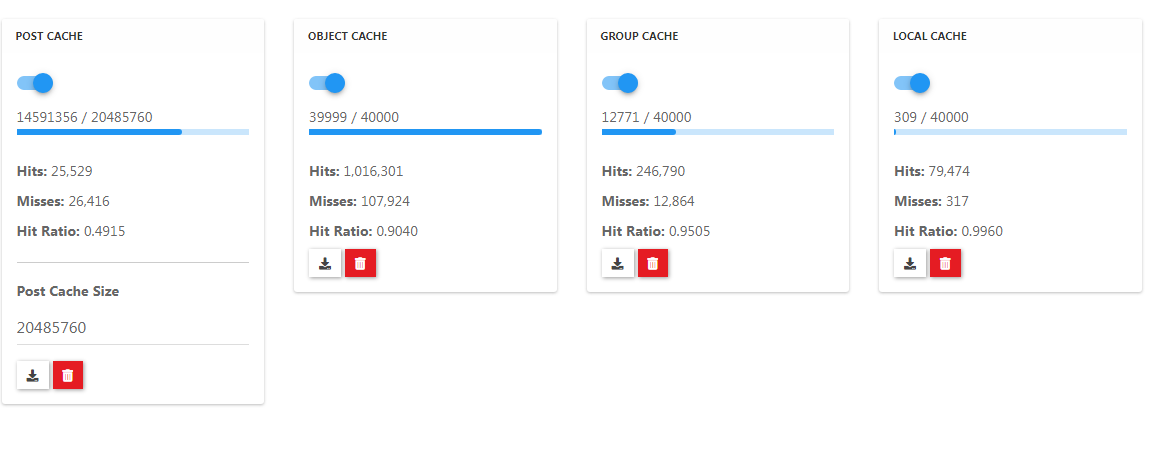

Another quick thought: What is ACP->Advanced->Cache telling you about your hit ratios, eh?

Have fun!

-

@phenomlab said in How to reduce the load?:

@julian said in How to reduce the load?:

HTTP 420 Enhance Your Calm

Lenina Huxley...

And that made me think of Brave New World, which I realize wasn't your intention, but now I want to read that book again...

used as a rate-limiting mechanism.