Unsolicited opinion: If #Mastodon wants to be a part of the #Fediverse that encourages small #selfhosted instances to be part of the whole, then it has to be a lean system able to be installed on second-hand/hand-me-down hardware and it should run reas...

-

@jerome @EdwinG @chris I'm clearly biased here, but we'd say that it's rather the opposite: https://www.elastic.co/blog/elasticsearch-opensearch-performance-gap

-

Chris Alemany 🇺🇦🇨🇦🇪🇸 replied to Philipp Krenn

@xeraa thanks! I'm running a straight install on Debian. Can you point me to some configuration options for the heap size?

-

Philipp Krenn replied to Chris Alemany 🇺🇦🇨🇦🇪🇸

@chris yes: https://www.elastic.co/guide/en/elasticsearch/reference/current/advanced-configuration.html

1. create a file in /etc/elasticsearch/jvm.options.d/ with the custom config (this will survive upgrades; don't change the jvm.options file directly)

2. try something like 700m as a starting point. you might be able to go a bit lower, or with more activity you might need to go higher. the settings page has details on Xmx and Xms; those are the ones you want
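The two steps above can be sketched in Python. This is a minimal sketch, assuming a Debian package install where custom JVM options go in `/etc/elasticsearch/jvm.options.d/` (the path from the post) and that files there need a `.options` suffix; the file name `heap.options` and the helpers `heap_options` and `write_heap_options` are hypothetical. It sets `-Xms` and `-Xmx` to the same value, which the Elasticsearch docs recommend. Writing under `/etc` needs root, and Elasticsearch has to be restarted to pick up the change.

```python
from pathlib import Path

# Directory from the post; files placed here survive package upgrades.
JVM_OPTIONS_DIR = Path("/etc/elasticsearch/jvm.options.d")


def heap_options(size: str = "700m") -> str:
    """Return jvm.options lines that set the initial and maximum heap to `size`."""
    # Same value for -Xms and -Xmx, so the heap is not resized at runtime.
    return f"-Xms{size}\n-Xmx{size}\n"


def write_heap_options(size: str = "700m", directory: Path = JVM_OPTIONS_DIR) -> Path:
    """Write a heap.options file into `directory` (hypothetical file name)."""
    # Assumption: custom files in jvm.options.d must end in .options.
    path = Path(directory) / "heap.options"
    path.write_text(heap_options(size))
    return path


if __name__ == "__main__":
    # Only print here, since writing to /etc needs root; restart Elasticsearch after writing.
    print(heap_options("700m"), end="")
```

Run as root, `write_heap_options("700m")` would create `/etc/elasticsearch/jvm.options.d/heap.options` with the two lines, leaving the packaged `jvm.options` untouched.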

-

Chris Alemany 🇺🇦🇨🇦🇪🇸 replied to Philipp Krenn

@xeraa @jerome @EdwinG it's really wonderful that you're here, Philipp. Your advice is really interesting and the opposite of what I've seen elsewhere. My old iMac (2008) has 16GB of RAM, and I had previously been told to allocate 25-50% of the RAM to Java, which at the upper end translates to a mammoth 8GB. I'm going to try cutting it right down to 1GB and see what happens.

What are some metrics or tools I can use to determine whether the amount of RAM it is using is suitable?

-

Philipp Krenn replied to Chris Alemany 🇺🇦🇨🇦🇪🇸

@chris @jerome @EdwinG so elasticsearch needs both on-heap and off-heap (mostly caching) memory; normally you'd split the memory into equal parts. our smallest cloud instance has 1GB of memory and 500MB of heap

your use case might need more (depending on how hard you push it), but just to get started you should be able to go with 1GB total, or maybe even a little less
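The equal split described above is simple arithmetic. A minimal sketch: `split_memory` is a hypothetical helper, and the 50/50 ratio is the rule of thumb from this post, not a fixed Elasticsearch formula. It divides the memory you decide to give Elasticsearch between the JVM heap and off-heap memory (mostly caching).

```python
def split_memory(budget_mb: int, heap_fraction: float = 0.5) -> tuple[int, int]:
    """Split a memory budget in MB into (heap_mb, off_heap_mb)."""
    if budget_mb <= 0:
        raise ValueError("budget_mb must be positive")
    if not 0 < heap_fraction < 1:
        raise ValueError("heap_fraction must be strictly between 0 and 1")
    heap_mb = int(budget_mb * heap_fraction)
    # Whatever is not heap is left for off-heap use such as the OS file cache.
    return heap_mb, budget_mb - heap_mb


if __name__ == "__main__":
    # A 1 GB budget, close to the smallest cloud instance mentioned in the thread.
    print(split_memory(1024))  # (512, 512)
    # Applying the same split to the whole 16 GB iMac gives an 8 GB heap.
    print(split_memory(16 * 1024))  # (8192, 8192)
```

The first number is what would go into `-Xms`/`-Xmx` (for example `512m`); the second half is not configured anywhere and is simply left free for the operating system to use as cache.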

and I'd start with the JVM memory pressure to debug: https://www.elastic.co/guide/en/elasticsearch/reference/current/high-jvm-memory-pressure.html

-

@chris @jerome @EdwinG the main pain you'll experience is that, by default, elasticsearch assumes it should use the entire machine / VM / container, so it allocates 50% of the memory to its heap. if you have a 16GB instance, it doesn't necessarily need 8GB of heap, but you need to tell it what you feel is appropriate
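The JVM memory pressure check linked in the previous post is based on the old-generation heap pool reported by the node stats API. A minimal sketch, assuming a local node that answers plain HTTP on `localhost:9200` without authentication (recent versions enable TLS and passwords by default, so the URL and credentials may need adjusting); `jvm_memory_pressure` and `fetch_node_stats` are hypothetical helper names. It requests `_nodes/stats?filter_path=nodes.*.jvm.mem.pools.old` and reports `used_in_bytes / max_in_bytes` per node as a percentage.

```python
import json
import urllib.request

# Assumption: a local node reachable over plain HTTP without authentication.
STATS_URL = "http://localhost:9200/_nodes/stats?filter_path=nodes.*.jvm.mem.pools.old"


def jvm_memory_pressure(stats: dict) -> dict[str, float]:
    """Map node id -> old-generation heap usage in percent (used / max * 100)."""
    pressure = {}
    for node_id, node in stats.get("nodes", {}).items():
        old = node["jvm"]["mem"]["pools"]["old"]
        if old["max_in_bytes"] > 0:  # skip pools without a reported maximum
            pressure[node_id] = 100.0 * old["used_in_bytes"] / old["max_in_bytes"]
    return pressure


def fetch_node_stats(url: str = STATS_URL) -> dict:
    """GET the node stats endpoint and decode the JSON body."""
    with urllib.request.urlopen(url, timeout=10) as resp:
        return json.load(resp)


if __name__ == "__main__":
    try:
        for node_id, pct in jvm_memory_pressure(fetch_node_stats()).items():
            print(f"{node_id}: {pct:.1f}% JVM memory pressure")
    except OSError as exc:  # connection refused, timeouts and HTTP errors
        print(f"could not query Elasticsearch: {exc}")
```

Sampling this a few times while the instance is busy gives a rough idea of whether the chosen heap is too tight (pressure staying high) or generous (pressure staying low).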

-


Chris Alemany 🇺🇦🇨🇦🇪🇸 replied to Philipp Krenn

@xeraa Thanks again for your help.

I set it down to

-Xms1g

-Xmx1g

elasticsearch reports: heap size [1gb], compressed ordinary object pointers [true]

I have a fairly active account, but my little instance is relatively quiet, as there are only three of us on it.

I had originally set the heap size to 2G (Xmx/Xms) as recommended in the Mastodon docs. But maybe that's too much for my purposes, and I should have reduced its memory rather than increased it.

The log seems very quiet, with no obvious errors that I can see.
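To confirm which heap size a node actually started with, one option is to search its log for the startup line quoted in this post. A minimal sketch: `heap_from_text` and `heap_from_log` are hypothetical helpers, and the regular expression is modelled only on that one quoted line, so other Elasticsearch versions may word it differently. On Debian package installs the logs usually sit under `/var/log/elasticsearch/`; treat that location as an assumption and check `path.logs`.

```python
import re
from pathlib import Path
from typing import Optional

# Pattern modelled on the line quoted in the post:
#   heap size [1gb], compressed ordinary object pointers [true]
HEAP_LINE = re.compile(
    r"heap size \[(?P<heap>[^\]]+)\], "
    r"compressed ordinary object pointers \[(?P<oops>true|false)\]"
)


def heap_from_text(text: str) -> Optional[tuple[str, bool]]:
    """Return (heap size, compressed oops flag) from the last matching line, or None."""
    matches = list(HEAP_LINE.finditer(text))
    if not matches:
        return None
    last = matches[-1]  # the most recent startup is the one in effect
    return last["heap"], last["oops"] == "true"


def heap_from_log(path: Path) -> Optional[tuple[str, bool]]:
    """Read a log file (location is an assumption) and parse the heap line."""
    return heap_from_text(Path(path).read_text(errors="replace"))
```

Using the last match matters when a log covers several restarts, for example one with the earlier 2G setting followed by one with 1g.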

-

Chris Alemany 🇺🇦🇨🇦🇪🇸 replied to Philipp Krenn

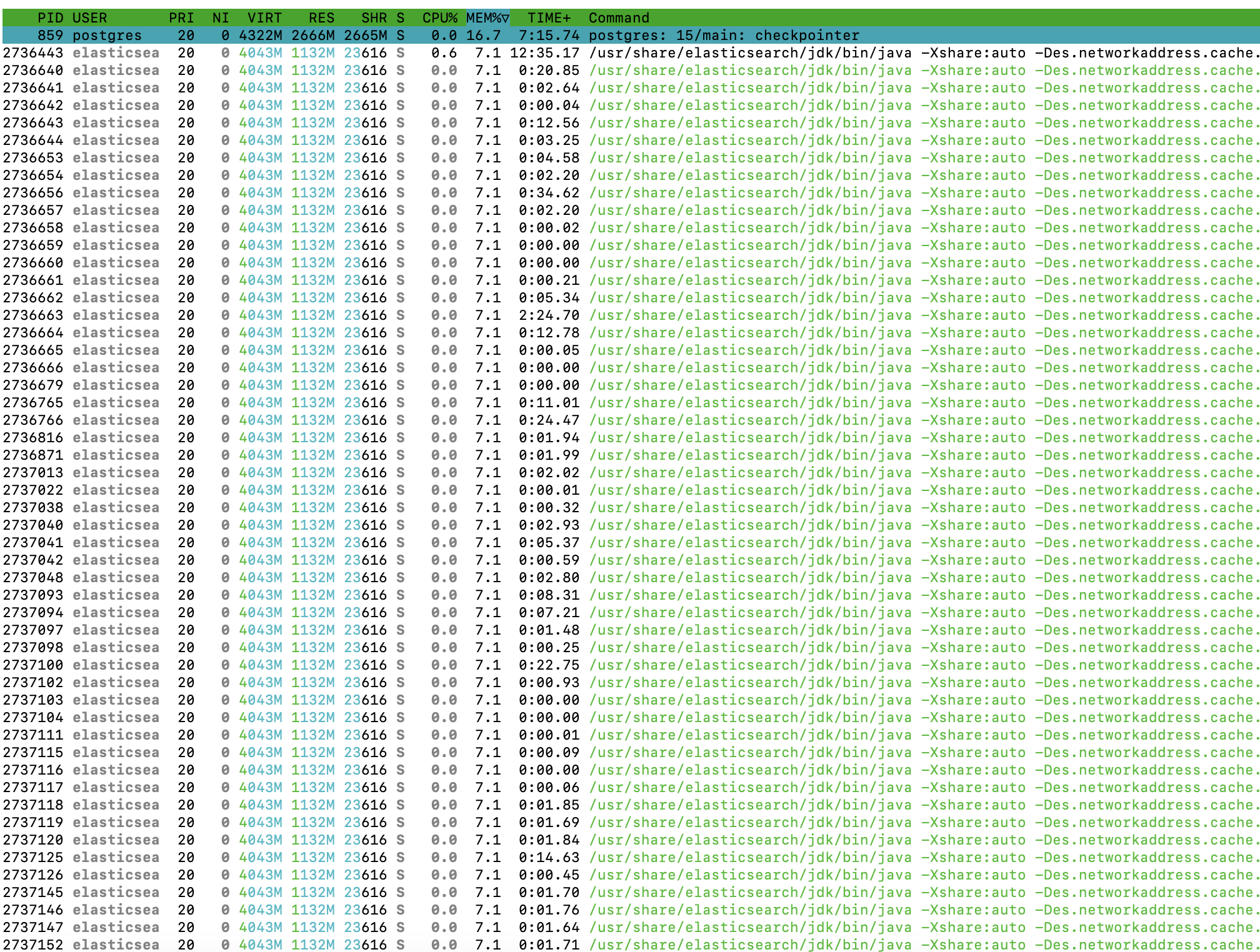

@xeraa I'm not sure setting it to a lower value has done much good. This is the report from htop: there are 50+ processes from elasticsearch, each claiming 7.1% of memory, which obviously adds up to more than 100%, so I'm thinking this is causing some serious issues here. The system is very sluggish.
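One way to check whether those rows are separate processes or threads of a single JVM is to read the Linux `/proc` filesystem. By default htop shows userland threads as their own rows (the `H` key toggles this), and each thread row reports the memory of its whole process, so per-row percentages are not meant to be added together. A minimal, Linux-only sketch: `parse_status`, `summarize` and `process_memory` are hypothetical helpers that read `Tgid`, `Threads` and `VmRSS` from `/proc/<pid>/status`, using a PID or thread ID copied from htop's PID column.

```python
import os
from pathlib import Path


def parse_status(text: str) -> dict[str, str]:
    """Parse the 'Key:\tvalue' lines of /proc/<pid>/status into a dict."""
    fields = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            fields[key] = value.strip()
    return fields


def summarize(status: dict[str, str]) -> dict[str, int]:
    """Pick out the owning process, its thread count and resident memory."""
    return {
        "tgid": int(status["Tgid"]),  # identical for every thread of one process
        "threads": int(status["Threads"]),
        "rss_kb": int(status["VmRSS"].split()[0]),  # "734512 kB" -> 734512
    }


def process_memory(pid: int) -> dict[str, int]:
    """Read /proc/<pid>/status (Linux only); thread IDs from htop work too."""
    return summarize(parse_status(Path(f"/proc/{pid}/status").read_text()))


if __name__ == "__main__":
    # Demo on this script's own process; substitute a PID from htop.
    if Path("/proc/self/status").exists():
        print(process_memory(os.getpid()))
```

If several htop rows map to the same `tgid`, they are threads of one JVM, and that process's `rss_kb` is the figure to compare against the machine's total RAM.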