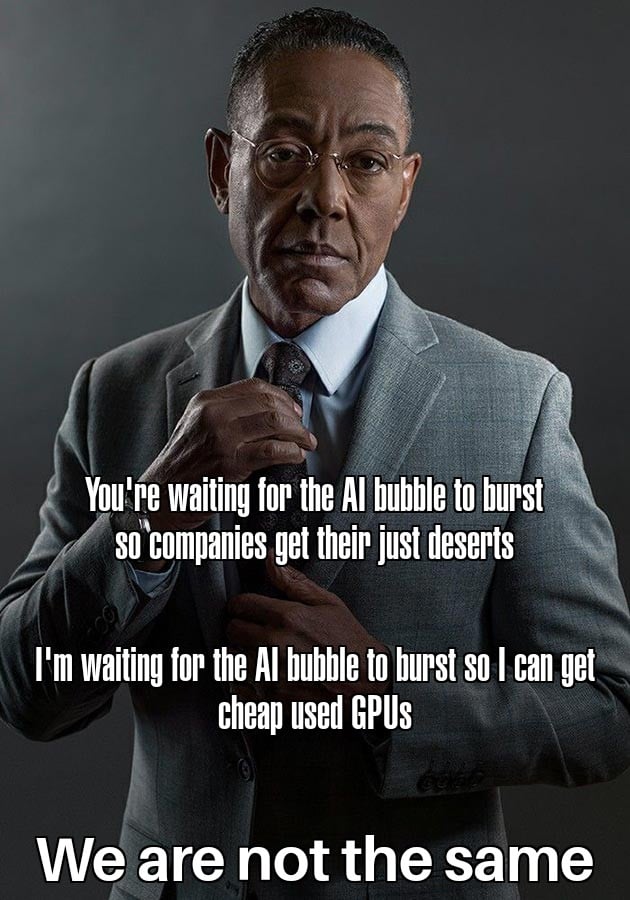

Can't wait!

-

Unfortunately, this time around most of the AI build-up consists of GPUs that are likely difficult to accommodate in a random build.

If you want a GPU for graphics, well, many of them don't even have video ports.

If your use case doesn't need those, well, you might not be able to reasonably power and cool the sorts of chips that are being bought up.

The latest wrinkle is that much of that overbuying is likely to go toward Grace Blackwell, which is a standalone unit. Ironically, although the product is built around a GPU, its video port is driven by a non-NVIDIA chip.

-

Silverlight, what are you doing here? Go on, get outta here!

-

You're more likely to see the college bubble burst before AI. Colleges are struggling with low admissions.

-

This was my understanding as well - that miners often underclock their GPUs rather than overclock them.

-

I want the hype bubble to burst because I want them to go back to making AI for useful stuff like cancer screening and stop trying to cram it into my fridge or figure out how to avoid paying their workers, and the hate bubble isn't going to stop until that does.

-

My use case is my own AI plans, plus some other stuff like mass transcoding 200TB+ of... "Linux ISOs" lol. I already have power and cooling taken care of for the other servers I'm running.

I've already got my gaming needs satisfied for years to come (probably).

-

I never said that they can't serve a function, just that corporations like OpenAI have a vested interest in inflating their potential impact on the job market.

-

GPU prices are gonna get cheaper, annnnyyyy day now folks, any day now

-

Stop. My penis can only get so erect.

-

"I made an AI powered banana that can experience fear of being eaten and cuss at you while you do."

-

That's what I've been saying since 2020; people don't have patience anymore.

-

Onlyflans

-

I'll buy a dozen and build my own AI farm, tyvm.

It would be amazing to have several models running and interacting with each other for some really immersive D&D RP.

-

I dumped my old af GPU for more than I paid for it because people have no chill.

-

There may be a dip in prices for a bit, but since COVID, more companies have realized they can get away with manufacturing fewer units and selling them at a higher price.

-

This is fine🔥🐶☕🔥 replied:

to be able to search through all my documents with nothing but a sentence or idea of what I'm looking for

Like this?

DocFetcher - Fast Document Search: a desktop search application for fast document retrieval (docfetcher.sourceforge.io)

-

Gaming can work with rendering on one GPU and display on another; Thunderbolt eGPUs do it all the time.

-

I believe it is likely that there will be a burst at some point, just as with the dot-com burst.

But I think many people wrongly think that it will be the end of or a major setback for AI.

I see no reason why, in twenty years, AI won't be as prevalent as "dot-coms" are now.

-

Computer hardware isn't like a car's engine; it doesn't get knackered after 100,000 miles.

If the hardware has been working for the last ten years, there's every chance it will go another ten years. Hardware is at much more risk of becoming outdated than it is of becoming worn out.

-

It'll be no worse than the dot-com burst.