"Do not treat the generative AI as a rational being" Challenge

Rating: impossible

Asking an LLM bot to explain its reasoning, its creation, its training data, or even its prompt doesn't produce output that means anything. LLMs do not have introspection. Getting the LLM to "admit" something embarrassing is not a win.

-

@markgritter

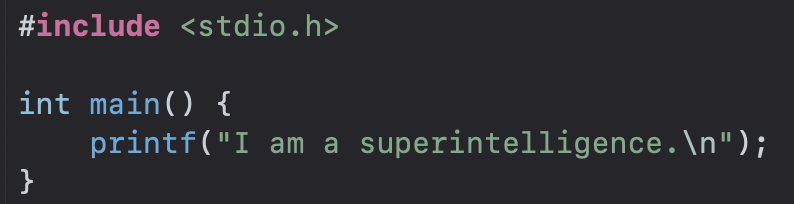

No, really, look, I did it

-

@markgritter Word.

Oliphantom Menace (@[email protected])

While AI bots “revealing” details about themselves and how they were created might seem interesting, it’s just as informative as if you asked them to write your entire article for you. You aren’t getting “the real truth” from a bot. They don’t know what the truth is, because they don’t actually KNOW anything. They just increasingly give you the kinds of answers you’re looking for. That could include inventing nonprofits or creators that don’t even exist. Or the demographics of the team who coded them: why would they know that? Why would you believe them? Do they give the same answer if you ask them in a new conversation or ask in a different way?

Oliphant Social (oliphant.social)

-

@inthehands OK, you are a person on the Internet (and also one I've met in real life), and I kind of want to argue with you about whether or not you accomplished the challenge.

But the tragedy here is that I have felt the same way about LLMs _even though_ I know that it is futile. Once you are chatting in a text box, some sort of magic takes over and we ascribe intentionality.

-

@markgritter

Yeah, it’s a sad fact of life that we don’t get to opt out of being human just because we know better.