@slightlyoff once we got that work down to under 2s, the tool started identifying the LCP correctly. So we were getting a more accurate LCP measurement, but to people only looking at dashboards it made our work look pointless.

Posts

-

Come for the frank discussion of the limiting factors to the web's longevity, stay for the incontrovertible evidence that Next.js suuuuuuuuuuuuuuuucks

-

@slightlyoff like, I have to look after a Next.js site, and we were using Catchpoint to at least firefight performance regressions. We did a lot of work to get a fix out, only to find our LCP score jumped in the wrong direction. Turns out the site was so bad that it didn't send a network request for 2 seconds while it ploughed through the mass of JS we were sending. The tool took that gap to mean the site had finished loading, so it took the LCP from before that point.
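Rough sketch of the failure mode, for anyone following along. This is not Catchpoint's actual algorithm, just an assumed "2 seconds of network quiet means done" heuristic; the function names, the 2000 ms constant, and all the timings are made up for illustration.

```python
from typing import List, Optional

# Assumption: the tool stops observing after this much network silence.
IDLE_WINDOW_MS = 2000


def end_of_test(request_times_ms: List[int], idle_ms: int = IDLE_WINDOW_MS) -> int:
    """Time at which a hypothetical network-idle heuristic stops observing."""
    times = sorted(request_times_ms)
    for prev, nxt in zip(times, times[1:]):
        if nxt - prev >= idle_ms:
            # A long enough gap: the tool decides the page is done here.
            return prev + idle_ms
    # No long gap: it waits out the idle window after the last request.
    return times[-1] + idle_ms


def reported_lcp(request_times_ms: List[int],
                 lcp_candidates_ms: List[int]) -> Optional[int]:
    """Latest LCP candidate the tool saw before it stopped observing."""
    cutoff = end_of_test(request_times_ms)
    seen = [t for t in lcp_candidates_ms if t <= cutoff]
    return max(seen) if seen else None


# Before the fix: JS blocks the page from 600 ms to 3100 ms (no requests),
# so the tool stops at 2600 ms and never sees the real LCP at 3600 ms.
before = reported_lcp([0, 300, 600, 3100, 3400], [500, 3600])

# After the fix: the longest gap is 1400 ms, so the tool keeps watching
# and sees the real LCP at 2500 ms.
after = reported_lcp([0, 300, 600, 2000, 2300], [500, 2500])

print(before, after)
```

With these made-up numbers the real LCP improves from 3600 ms to 2500 ms, but the reported value goes from 500 ms to 2500 ms, which is the "jumped in the wrong direction" effect on a dashboard.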

-

@slightlyoff yeah, survivor bias if you will, but I'm not convinced it's just that. And to be clear, I wasn't accusing them of a VW Dieselgate type situation either. Just that I've seen some performance tools get messed up by really bad sites and report incorrectly.

-

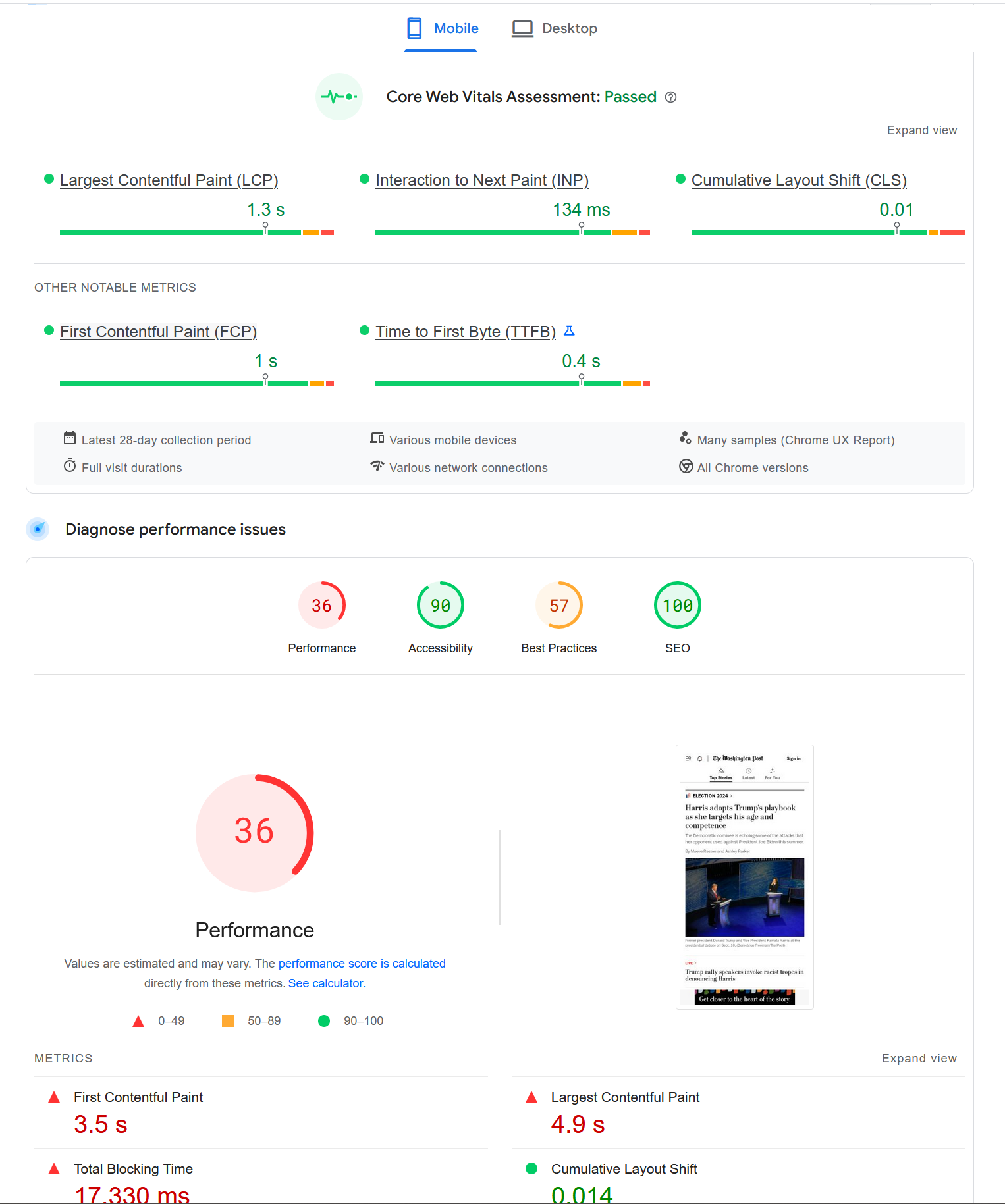

@slightlyoff it's weird seeing washingtonpost.com as all green in your footnotes. When I manually run Lighthouse on them, their "field" CrUX data is good, but the actual report is pretty bad.