For the first time the #CoSocialCa Mastodon server has started to struggle just a little bit to keep up with the flow of the Fediverse.

-

The staging server only has 2 GB of RAM, but it also has virtually no queue activity, so let’s give it a shot.

Having confirmed that we have sufficient resources to accommodate the increase, and having picked a number out of a hat, I’m going to increase the number of threads to 40.

6/?
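In Sidekiq, "threads" means the concurrency set with `-c`. Mastodon's sample systemd unit sets a matching `DB_POOL` environment variable next to `-c` so each thread can get a database connection. Here is a minimal sketch that assumes Sidekiq is launched with `bundle exec sidekiq` from the Mastodon directory. `sidekiq_command` is a hypothetical helper that only prints the command line. It does not run anything.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print (not run) the Sidekiq command line for a given
# thread count, with DB_POOL set to the same number so each worker thread can
# hold its own database connection. Assumes Sidekiq is launched with
# `bundle exec sidekiq` from the Mastodon directory.
sidekiq_command() {
  local threads="$1"
  # Reject anything that is not a positive integer.
  if ! [[ "$threads" =~ ^[1-9][0-9]*$ ]]; then
    echo "usage: sidekiq_command <threads>" >&2
    return 1
  fi
  printf 'DB_POOL=%d bundle exec sidekiq -c %d\n' "$threads" "$threads"
}
```

`sidekiq_command 40` prints `DB_POOL=40 bundle exec sidekiq -c 40`. Matching the pool to the thread count keeps threads from waiting on a free connection. It also means PostgreSQL has to accept that many connections from this one process.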

-

No signs of trouble. Everything still hunky-dory in staging.

On to production.

If this is the last post you read from our server then something has gone very wrong.

7/?

-

@virtuous_sloth Only thing I can figure is that we’ve taken on a bunch of new followers as a server and tipped across some threshold? Or the users we follow are more chatty of late?

It did start when Eurovision began, but as a small server of Canadians, idk how much Eurovision discussion we were plugged into.

A few more threads ought to help. If not, I’ll delve deeper.

-

Aaaand we’re good.

I’ll keep an eye on things over the next few days and the coming week to see whether this has any measurable impact on performance, one way or the other.

And that’s enough recreational server maintenance for one Friday night.

8/?

-

@mick @thisismissem so the thing that has me thinking about this is I was using activitypub.academy to view some logs. I sent a follow to my server, and it showed that my server kept sending duplicate "Accept" messages back. I can't tell whether that's an issue with my server or with the academy, because I can't see my logs.

-

@mick @thisismissem people told me that ActivityPub was very "chatty". I understand a lot better why that is now. But I now suspect that there's also a ton of inefficiency there, because few people are looking at the actual production behavior.

-

@thisismissem @mick one thing I know is a problem is retries that build up in sidekiq. Sidekiq will retry jobs basically forever, and when servers disappear, their jobs sit in the retry queue failing indefinitely. I'm sure larger instances with infra teams do some cleanup here. But how are smaller instances supposed to learn about this?
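For reference, Sidekiq's documented default retry policy is bounded. A job gets 25 retries with exponential backoff and then moves to the Dead set. An individual worker can override this with `sidekiq_options retry: ...`, so Mastodon's own jobs may behave differently. The sketch below shows the default schedule for a job that keeps Sidekiq's defaults. It drops the random jitter term, so the totals are lower bounds. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch of Sidekiq's documented default retry backoff:
#   delay(n) = n^4 + 15 + rand(10) * (n + 1) seconds, for retry n = 0..24
# The random jitter term is dropped here (rand(10) taken as 0), so every
# value is a lower bound. Assumes the job does not override `retry`.

# Minimum delay in seconds before retry number n (0-based).
retry_delay_min() {
  local n="$1"
  echo $(( n ** 4 + 15 ))
}

# Minimum total seconds spent across all retries (default: 25 retries).
total_retry_window_min() {
  local max_retries="${1:-25}" total=0 n
  for (( n = 0; n < max_retries; n++ )); do
    total=$(( total + $(retry_delay_min "$n") ))
  done
  echo "$total"
}
```

The first delays come out to 15, 16, 31, 96 and 271 seconds. `total_retry_window_min` prints 1763395 seconds, a little over 20 days before jitter. After that, a job with default settings stops retrying.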

-

@[email protected] that's definitely a problem with the sidekiq configuration then. Keeping retries forever just clogs up the queue... I can't imagine they'd be kept forever!

-

@thisismissem @mick where? Are they configurable? And again, how would I know? Is the recommended support channel complaining on Mastodon until somebody tells you something that you can’t even verify?

-

@thisismissem @mick all I’m really saying is that even if I wanted to get more knowledgeable and make some decisions about how my server works, I don’t even have the kind of visibility that would facilitate making good decisions.

-

@polotek @mick @thisismissem what happened to the attempt to wire Mastodon up with OpenTelemetry? This kind of thing is what honeycomb.io is really good at exploring

-

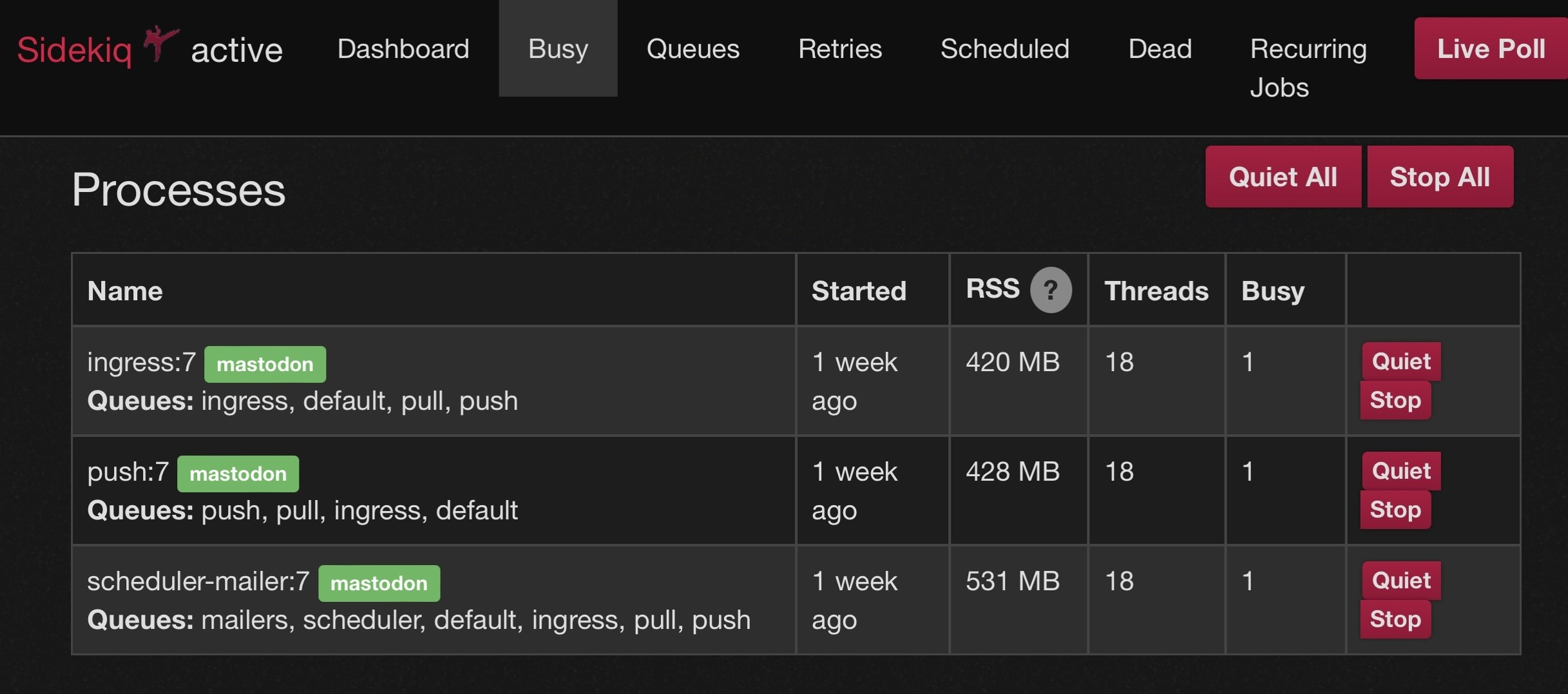

@mick @virtuous_sloth If you run multiple Sidekiqs, you can give each one its own priority ordering of queues. So instead of growing a single Sidekiq, you can run 2 or 3 smaller ones with the same total threads but different queue orders. This lets all of the Sidekiqs work on a temporarily large push or pull queue while making sure that every queue gets attention. Just be sure that “scheduler” only appears in one of the Sidekiq instances.

Example:
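When `-q` flags are given without weights, Sidekiq treats them as a strict priority order. A process only takes work from a later queue when every earlier queue in its list is empty. That is why two processes with opposite orders can both chew through a backlog. The sketch below is a hypothetical model of that rule and is not Sidekiq code. It passes queue depths as `name=count` pairs.

```shell
#!/usr/bin/env bash
# Hypothetical model of strict queue ordering (plain -q flags, no weights):
# a process takes its next job from the first queue in its own list that
# still has work. Depths are given as space-separated name=count pairs.
next_queue() {
  # usage: next_queue "q1 q2 q3" "q1=0 q2=5 q3=9"
  local order="$1" depths="$2" q pair
  for q in $order; do
    for pair in $depths; do
      # Match this queue's pair and check it is non-empty.
      if [[ "$pair" == "${q}="* && "${pair#*=}" -gt 0 ]]; then
        echo "$q"
        return 0
      fi
    done
  done
  return 1  # every queue in this process's list is empty
}
```

With `ingress=0 default=0 pull=500 push=2000`, a process ordered `ingress default pull push` picks `pull`, and one ordered `push pull ingress default` picks `push`. Both backlogs get threads.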

-

@KevinMarks @polotek @mick I think it's still in progress

-

The Mastodon team mentions in https://blog.joinmastodon.org/2024/05/trunk-tidbits-april-2024/ that they're trying to get OpenTelemetry into 4.3.

-

@polotek @thisismissem I feel this. There’s very little clear documentation that describes how to even go about getting a clear picture of how queues are performing over time.

That’s sorta why I started this thread. A lot of what I’ve figured out about running a server has come from the community of #MastoAdmin figuring it out in public.
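One low-tech way to see how queues behave over time is to sample their depths straight from Redis. This is a minimal sketch that assumes Sidekiq's standard Redis layout with no key namespace. In that layout, pending jobs sit in lists named `queue:<name>`, and the retry, scheduled and dead jobs sit in sorted sets named `retry`, `schedule` and `dead`. The queue list comes from the commands in this thread. `snapshot_queues` and `REDIS_CLI_ARGS` are hypothetical names.

```shell
#!/usr/bin/env bash
# Sketch: print one timestamped line of Sidekiq queue depths.
# Assumes Sidekiq's Redis layout with no namespace: pending jobs in lists
# named queue:<name>; retry/scheduled/dead jobs in sorted sets named
# retry, schedule and dead. REDIS_CLI_ARGS (hypothetical) may hold
# connection options such as -h, -p or -n for redis-cli.
QUEUES=(default push pull ingress mailers scheduler)

snapshot_queues() {
  local line q
  line="$(date -u +%Y-%m-%dT%H:%M:%SZ)"
  for q in "${QUEUES[@]}"; do
    # REDIS_CLI_ARGS is left unquoted on purpose so it splits into options.
    line+=" ${q}=$(redis-cli ${REDIS_CLI_ARGS:-} LLEN "queue:${q}")"
  done
  for q in retry schedule dead; do
    line+=" ${q}=$(redis-cli ${REDIS_CLI_ARGS:-} ZCARD "${q}")"
  done
  echo "$line"
}
```

Running `snapshot_queues >> queue-depths.log` every few minutes (for example, from cron) builds a crude history you can graph or grep. A steadily growing `retry` count is the build-up described earlier in this thread.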

-

@KevinMarks @polotek @thisismissem thanks for this, will check it out.

-

@bplein @virtuous_sloth that’s where we’re headed.

Right now trying to “make number bigger and see what happens.”

-

@mick @virtuous_sloth Here are my 3 commands to run it the way I do (I am doing mine from docker-compose but have stripped out that part). Many people have done this using systemd but that’s beyond my ability to help (because: docker)

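```shell
# Process 1: works ingress first, then default, pull, push
bundle exec sidekiq -c 18 -q ingress -q default -q pull -q push

# Process 2: works push first, then pull, ingress, default
bundle exec sidekiq -c 18 -q push -q pull -q ingress -q default

# Process 3: the only one serving mailers and scheduler
bundle exec sidekiq -c 18 -q mailers -q scheduler -q default -q ingress -q pull -q push
```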
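The "scheduler in only one instance" rule is easy to break when editing several launch commands. Here is a minimal sketch of a check. `count_processes_with_queue` is a hypothetical helper that counts how many of the given command lines subscribe to a queue through a `-q <name>` pair. It assumes queues are passed as separate `-q` flags, as in the commands above, and it also strips optional `,weight` suffixes.

```shell
#!/usr/bin/env bash
# Hypothetical check: count how many Sidekiq command lines subscribe to a
# queue via a "-q <name>" (or "-q <name>,<weight>") pair. The advice in
# this thread is that "scheduler" should appear in exactly one process.
count_processes_with_queue() {
  local queue="$1"; shift
  local count=0 cmd prev word
  for cmd in "$@"; do
    prev=""
    # Word-split the command line and look at each "-q <value>" pair.
    for word in $cmd; do
      if [[ "$prev" == "-q" && "${word%%,*}" == "$queue" ]]; then
        count=$(( count + 1 ))
        break  # count each process at most once
      fi
      prev="$word"
    done
  done
  echo "$count"
}
```

For the three commands above, this gives 1 for `scheduler` and 3 for `push`. Those three processes also run 3 × 18 = 54 threads in total, which is worth keeping in mind when sizing database connections.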